The 8 Sacred Cows of Systems Thinking

This isn't a blog post so much as a blog collection: 8 common things that systems thinkers like to say that are bupkis. We call them sacred cows of systems thinking because it is almost blasphemous to disagree with them, even though most have a much bigger bark than bite. Click on the links below (in the right column) to read the blogs on each sacred cow.

And one thing is for sure: dare to challenge any one of these sacred cows 🐮, and you'll take a rash of shit 💩 for it. People will come out of the woodwork to defend them with great fanfare and handwaving 👋. But stay steady: the logic of nature 🌱 and science 🔬 eventually wins them over, though it can take time ⌛. Ugh. 😛

In the list below, click on the links to go to articles on the sacred cow and its scientifically-valid replacement.

The 8 Sacred Cows of Systems Thinking

- Sacred Cow The whole is more than the sum of the parts (W>P).

- True or False? False

- Scientifically-valid Replacement The whole is always precisely equal to its parts (W=P).

- Brief Explanation: W>P is a trick that works because you artificially decide not to define relationships as parts; a misconception of emergence.

- Sacred Cow All systems have a purpose

- True or False? False

- Scientifically-valid Replacement The purpose of a system is what it does (POSIWID).

- Brief Explanation: We often impose our aspirational or biased purposes on systems. What the system actually does is its purpose because its structure determines its behavior.

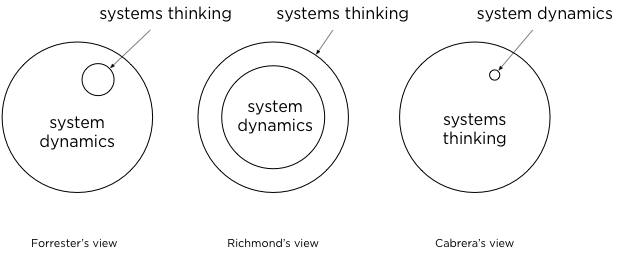

- Sacred Cow Systems thinking is the systems dynamics framework [or insert any other tribal framework here].

- True or False? False

- Scientifically-valid Replacement Systems Thinking is an emergent property (an outcome) of four simple rules (DSRP).

- Brief Explanation: Systems thinking isn't something you do, it's something you get (an emergent property) by following simple rules. DSRP rules have been empirically validated.

- Sacred Cow Systems thinking is holistic (variant: systems thinking is anti-reductionist).

- True or False? False

- Scientifically-valid Replacement Systems thinking is balanced thinking (both/and holistic and reductionistic, not either/or).

- Brief Explanation: Systems Thinking is “middle way” thinking. It is incoherent to think about the whole (holism) without thinking also about the parts (reductionism), and vice versa.

- Sacred Cow It's all about relationships.

- True or False? False

- Scientifically-valid Replacement It's not all about Relationships (R), it's also about Distinctions (D), Systems (S) and Perspectives (P).

- Brief Explanation: Relationships are not enough. DSRP is present in all systems.

- Sacred Cow Everything is connected.

- True or False? False

- Scientifically-valid Replacement Everything is connected, or not.

- Brief Explanation: How things are connected, and not connected, is critically important to system behavior. In nature, networks where everything is connected to everything else are rare. Everything may be interconnected eventually, through chains of intermediate links, but it is not the case that everything is directly connected, and believing so leads to all kinds of erroneous conclusions.

- Sacred Cow There is no system "out there." (variant: Systems are not real, they are only mental models).

- True or False? False

- Scientifically-valid Replacement There is a system out there; it just may be different from your mental model of it.

- Brief Explanation: Systems exist in the real world; it's our job to try to get our mental models in alignment with them (a.k.a., "science").

- Sacred Cow It's all about the context.

- True or False? False

- Scientifically-valid Replacement The context of stuff is other stuff.

- Brief Explanation: Context is a lazy/vague term. It is the enemy of systems thinking because it causes us not to see all the other stuff (complexity).
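The "Everything is connected, or not" entry above is easy to check on a toy example. Here is a minimal Python sketch, not taken from any of the linked articles: the six-node `chain` network and the helper names `is_connected` and `density` are made up for illustration. It shows a network in which every node can eventually reach every other node, even though only a third of the possible direct links exist.

```python
from collections import deque


def is_connected(adjacency):
    """Return True if every node is reachable from an arbitrary start node."""
    if not adjacency:
        return True
    start = next(iter(adjacency))
    seen = {start}
    queue = deque([start])
    # Breadth-first search: follow links outward from the start node.
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return len(seen) == len(adjacency)


def density(adjacency):
    """Fraction of possible undirected links that are actually present."""
    n = len(adjacency)
    if n < 2:
        return 0.0
    # Each undirected link appears in two neighbor sets, so halve the total.
    edges = sum(len(neighbors) for neighbors in adjacency.values()) / 2
    return edges / (n * (n - 1) / 2)


# Hypothetical toy network: a simple chain A-B-C-D-E-F.
chain = {
    "A": {"B"},
    "B": {"A", "C"},
    "C": {"B", "D"},
    "D": {"C", "E"},
    "E": {"D", "F"},
    "F": {"E"},
}

print(is_connected(chain))        # every node is reachable, eventually
print(round(density(chain), 2))   # share of direct links that exist
```

In this toy chain, A can reach F only through four intermediaries, and cutting any single link splits the network in two. Which links exist, and which do not, is exactly what drives that behavior.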

In one sense, I agree with those who lament that semantic debates lack utility. Purely semantic debates, based entirely on opinion, are not terribly useful. Yet the distinctions above are not semantic: there are fundamental empirical differences between each sacred cow and its scientifically-valid replacement. These differences strike at issues of a fundamental nature, so it is critically important for the systems community to understand them; all misunderstandings stem from mistakes made at these "origin assumptions." All of the "sacred cows" I mention above are empirically testable, not a matter of opinion. In all cases, the distinction being made between the "sacred cow" and the replacement increases deep understanding of systems.

One of the things that all scientists (systems scientists included) must get better at is making scientific concepts accessible to the public (because the public funds our science) without dumbing them down to the point of losing fidelity with the scientific concept. All of the sacred cows I mention are guilty of over-simplifying or being somewhat loose with language. Thus, when these tropes get repeated and reinforced, they provide the public with a false understanding. When the public commits to these tropes so thoroughly that they become "sacred cows" it becomes even worse.

I update this list from time to time when a new trope rears its ugly head.
